Today is the day that Aaron Burr shot Alexander Hamilton. You don’t get to choose who lives, who dies, who tells your story.

Monthly Archives: July 2016


Waffles, our wee 6-pound cat, stalked a turkey. She insists it cheated: just when she had it, it flew into a tree. https://t.co/6bssRSzfmE

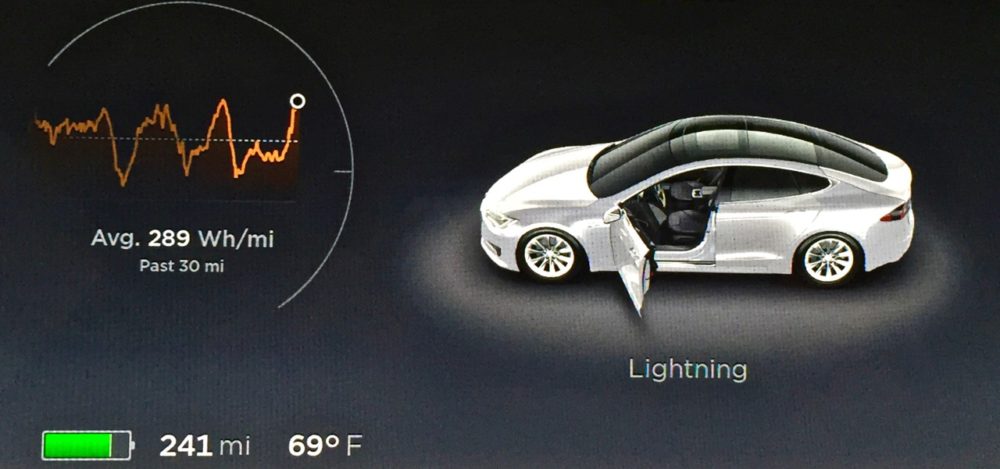

New plates and a new transponder

Odometer: 1,349

I haven’t taken any trips lately, so I haven’t had much to write about in Driving Lightning. That said, Lightning is settling into our household nicely. Recent milestones include:

- A new EZ-Pass transponder. The Massachusetts EZ-Pass office in Boston wouldn’t replace my 12-year-old FastLane transponder until it registered as “failed” on its tester. However, the Natick office on the Mass Pike recognized that 11-year-old batteries probably weren’t going to cut it with the Tesla’s windshield attenuation, and gave me one of the new transponders. I mounted it on the windshield, and so far the Mass Pike toll readers have read it reliably.

- My new ham radio plates. I received notification in June that my ham radio plates were delivered to the RMV, so I traded in my old plates for my new ones as shown. I’m thrilled with both the short license code (I can now actually remember my license plate when asked!) and the all-important lightning bolt logo for Lightning.

I do have a couple of minor issues that I’m going to have the service center address on July 11: I get some resonant buzzing from the AC unit at low speed, and there’s a leather defect on one of the rear seats. Overall, though, Lightning is fully operational now. I’ve driven it into Boston, to the grocery store, and to Geeks Who Drink (Tuesday night trivia in Waltham), and it is performing flawlessly. I’m seeing better efficiency (i.e., fewer watt-hours per mile) than the car itself predicts, and that means more battery range than advertised.

Because I’m not driving much, I’m continuing to schedule my battery charging during peak solar power generation times. So on those rare occasions when I am driving my Tesla, I really am driving on sunshine.


My long-winded opinion: autopilot didn’t cause the Tesla accident; it was a DWI: Driving While Irresponsible. carlhowe.com/blog/autopilot…

Autopilot didn’t cause the Tesla accident; it was a DWI: Driving While Irresponsible

The media has written many dramatic accounts of the May 7 accident in which a Tesla collided with a tractor trailer. Some read as if Elon Musk, Tesla, and autopilot technology caused the death of the driver. As someone who has flown aircraft using autopilots and who owns a Tesla with autopilot functions, I don’t think this is accurate. I hope this response will provide some balance in the discussion.

Based on my experience with Teslas, aircraft, and technology analysis in general, I assert the following:

- The accident was caused by driver negligence, pure and simple.

- Even including this fatality, data on autopilot use indicates it is safer than manual driving.

- Legal precedents already exist for how autopilots can and should be used.

- The issues regarding autonomous self-driving cars (as opposed to autopilots) will take at least a decade to be resolved.

With that as preface, let’s take the arguments one by one.

1. The accident was caused by driver negligence, pure and simple.

First, autopilots (the technology the Tesla was equipped with) and autonomous self-driving vehicles (think of those Google self-driving cars) are not the same thing. The U.S. National Highway Traffic Safety Administration (NHTSA) defines levels of vehicle automation ranging from Level 0 (no automation) to Level 4 (full self-driving automation), each with differing requirements. The Model S autopilot involved in the accident was a Level 2 system, which the NHTSA defines as:

[A level 2 system] involves automation of at least two primary control functions designed to work in unison to relieve the driver of control of those functions. An example of combined functions enabling a Level 2 system is adaptive cruise control in combination with lane centering.

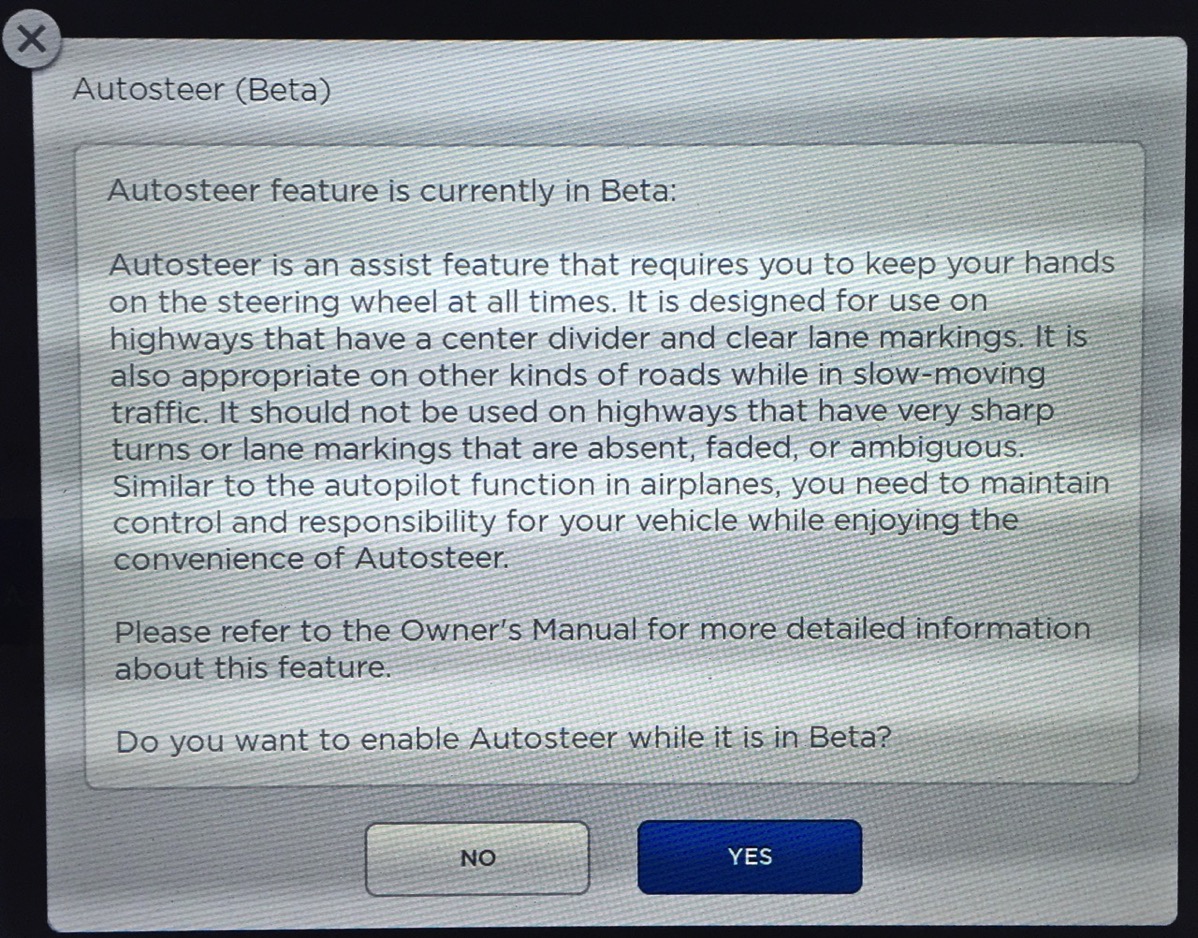

The driver was notified of the limitations of the Tesla autopilot when he first accepted the risks of using the beta autopilot, as shown in the screenshot above. Further, every time he invoked the auto-steering function on his car, a notice on the dashboard again reminded him to keep his hands on the wheel, retain control of the car, and be prepared to take over at any time. The fact that the driver then saw fit to watch a movie instead of the road was just as negligent in an autopilot-equipped car as it would have been in one without an autopilot. He failed to avoid an obstacle while driving his car, and that caused the accident. Had the driver lived, he would likely have been found negligent in a court of law simply because the law requires drivers to be in control of their vehicles at all times, regardless of what bells and whistles they may have bought.

Bottom line: the driver was negligent, not the autopilot.

All the arguments you hear about autonomous self-driving cars are about level 4 systems, which we’ll define and discuss in the fourth section.

2. Even including this fatality, what data we have on autopilot use indicates it is safer than manual driving.

As the Tesla team notes in their blog, the average fatality rate for U.S. drivers is approximately one fatality every 94 million miles. The latest NHTSA data shows that U.S. fatalities actually increased in 2015, to one fatality every 89 million miles.

Despite the Tesla driver’s poor judgment, the accident reported in May is the first fatality reported in 130 million miles of autopilot-enabled driving; in fact, it is one of only a very few fatalities ever to occur in a Tesla. Just based on these statistics, fatalities in a Tesla on autopilot occur at 68% of the rate in ordinary, non-autopilot-equipped cars.
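The 68% figure follows directly from the two mileage numbers. Here is a minimal Python sketch of that arithmetic using only the figures quoted above; the helper name `relative_rate` is purely illustrative. It reproduces 68% with the 2015 NHTSA rate and shows that Tesla’s 94-million-mile average gives roughly 72%. Because the autopilot figure rests on a single fatality, treat either ratio as a rough point estimate.

```python
# Fatality-rate comparison using the mileage figures quoted in this post.
AUTOPILOT_MILES_PER_FATALITY = 130e6   # one fatality in 130 million autopilot miles
US_2015_MILES_PER_FATALITY = 89e6      # 2015 NHTSA figure
US_AVERAGE_MILES_PER_FATALITY = 94e6   # average figure cited by Tesla

def relative_rate(miles_per_fatality_a, miles_per_fatality_b):
    """Return fatality rate A as a fraction of rate B (rates are fatalities per mile)."""
    return (1 / miles_per_fatality_a) / (1 / miles_per_fatality_b)

ratio_2015 = relative_rate(AUTOPILOT_MILES_PER_FATALITY, US_2015_MILES_PER_FATALITY)
ratio_avg = relative_rate(AUTOPILOT_MILES_PER_FATALITY, US_AVERAGE_MILES_PER_FATALITY)

print(f"Autopilot vs. 2015 U.S. rate:    {ratio_2015:.0%}")  # 89/130, about 68%
print(f"Autopilot vs. average U.S. rate: {ratio_avg:.0%}")   # 94/130, about 72%
```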

3. Legal precedents already exist for how autopilots can and should be used.

The first autopilot on an aircraft was demonstrated by Lawrence Sperry in 1914 on the banks of the Seine. Seemingly foreshadowing this recent Tesla incident, Sperry left the cockpit and allowed the aircraft to fly itself past the grandstands with no pilot at the controls, in what was probably the first example of irresponsible use of an autopilot.

Since that time, literally billions of passenger miles have been flown under autopilot control. A 777 today can fly itself from shortly after takeoff at New York’s JFK to a landing on a category III runway at London Heathrow with little pilot involvement and without the passengers ever knowing. Today’s autopilots are so good that pilots often have to take extraordinary measures to avoid falling asleep in the cockpit. Suffice it to say that aircraft autopilots are decades ahead of autopilots in automobiles, and they use equipment that is orders of magnitude more precise and costly.

Despite the capabilities of today’s aircraft autopilots, though, aviation law is quite clear: aircraft must have a pilot in command, and that pilot is ultimately and legally responsible for the operation of the aircraft. It is his or her responsibility to decide when to use the autopilot and whether it is safe to do so. The pilot in command is also responsible for understanding the limitations and possible failures of autopilot hardware and for being prepared to override the autopilot at any time.

In short, the legal buck always stops with the pilot, not the aircraft or the autopilot manufacturer, regardless of how good or bad those products may be. If that’s the case for autopilots that cost hundreds of thousands of dollars, it seems unlikely that we would absolve drivers of all driving liability when they rely on new systems that cost a few thousand.

4. The issues regarding autonomous self-driving cars (as opposed to autopilots) will take at least a decade to be resolved.

As noted in my first argument, despite being the most advanced car autopilot made today, Tesla’s autopilot is a level 2 system that is only designed to relieve the driver of some tedious tasks; it is not a fully autonomous level 4 self-driving system such as those being tested by Google and Audi. The NHTSA defines a level 4 autonomous system as:

[In a level 4 system,] the vehicle is designed to perform all safety-critical driving functions and monitor roadway conditions for an entire trip. Such a design anticipates that the driver will provide destination or navigation input, but is not expected to be available for control at any time during the trip. This includes both occupied and unoccupied vehicles.

Startups, automakers, venture capitalists, and the media have been breathless in their excitement about autonomous vehicles. Many seem to be hoping for a world where they can get smashed at parties, summon their vehicles to take them home, and be comforted by the knowledge that technology will get them there safely. It’s the ultimate Silicon Valley dream and promises to be worth billions.

I assert that the dream of level 4 autonomous vehicles will likely turn into an ethical and legal nightmare. The problem is not one of technology, but of philosophy and ethics. A self-driving car sounds innocuous, but in reality it is a software-controlled two-ton object that can’t overcome the laws of physics. A two-ton vehicle traveling at highway speeds has more than enough momentum to kill.

That claim may sound hyperbolic, but consider the following scenario: An autonomous vehicle carrying two passengers is traveling at the speed limit on a two-lane road with a car approaching from the other direction. A child dashes out from behind a tree so close in front of the autonomous vehicle that it cannot stop. If the car swerves to the right, it will likely hit the tree and kill the occupants. If the car swerves to the left, it will have a head-on collision with the oncoming car and will likely kill the occupants. Even if it brakes perfectly, it will still kill the child. What is the correct course of action for the car, who is responsible for the inevitable deaths, and, more importantly, will any of those actions be acceptable to society?

If you find this scenario too outlandish, consider this simpler one, all too familiar to the tech world: A consumer tasks an autonomous vehicle with taking him or her to work on a 70 mph freeway. En route, the autonomous software stops responding, and the car plows into several others, creating a multi-car accident. Who is responsible and pays for the damages: the consumer, the auto manufacturer, the autopilot programmer, or all of the above?

Finally, let’s consider a more nefarious use of automobile autonomy: a terrorist loads up an autonomous vehicle with explosives, sets its destination for some government building, and then gets out of the car. Will society accept this scenario as an acceptable risk of self-driving vehicles?

I believe that the legal system and our courts will eventually sort out the grim implications of fully autonomous vehicles, but I don’t think this will happen quickly or easily. Further, with billions of dollars in R&D and product liability at stake, many of these issues will need to be resolved either legislatively or by the Supreme Court before they are settled law. Perhaps the industry will ultimately come up with the equivalent of Isaac Asimov’s Three Laws of Robotics to govern autonomous vehicles; after all, an autonomous vehicle is simply a robot in the shape of a car. Regardless, I predict that it will be at least one decade and probably several before the law and society come to grips with these issues in any understandable and enforceable way.

In the meantime, though, autopilots such as Tesla’s will show up in more vehicles over time. In the absence of settled law, motor vehicle regulators and insurers are most likely to fall back on the only precedent they have: the driver is ultimately responsible for safely operating the car and autopilot, just as pilots are in aircraft.

Yes, we will see more fatalities when people use those tools irresponsibly. And while we may aspire to create autonomous technology that is near-foolproof, Mother Nature will always create better fools.

Which are greener: solar panels or electric cars?

A friend recently forwarded a good question:

Which creates a more positive environmental impact?

- Adding a 10kW PV system to one’s home?

- Buying an EV in place of a 35mpg gas-powered car?

For extra credit, which offers the most environmental savings per dollar spent?

Fortunately, some data answers this question pretty quickly.

We’ve had solar panels on our house for just about 30 months now. According to our solar monitoring service, the 31,673 kWh we’ve generated from solar are the equivalent of 2,797 gallons of gasoline.

When I was driving my Mercedes, I was consuming about 30 gallons of premium gasoline a month. Over 30 months, that adds up to 900 gallons of gasoline consumed. While an electric vehicle would save that 900 gallons of gasoline with no additional emissions, the solar panel array created more green benefit by a factor of about 3. Yes, a 35 mpg car would burn less fuel than the Mercedes did, but that just makes the electric vehicle savings worse, not better.
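The factor-of-3 comparison is easy to check. This minimal Python sketch uses only the figures quoted above; the kWh-per-gallon value is back-calculated from the monitoring service’s two numbers (the service does not state its conversion factor here), so treat it as an implied figure rather than an official constant.

```python
# Gasoline-equivalent comparison over the same 30-month period, using this post's figures.
SOLAR_KWH_GENERATED = 31_673       # reported by the solar monitoring service
SOLAR_GALLONS_EQUIVALENT = 2_797   # the service's gasoline-equivalent figure
GALLONS_PER_MONTH_MERCEDES = 30    # approximate premium gasoline use
MONTHS = 30

# Gallons an EV would have avoided by replacing the Mercedes.
ev_gallons_avoided = GALLONS_PER_MONTH_MERCEDES * MONTHS

# How many times more gasoline-equivalent the solar array produced.
solar_to_ev_ratio = SOLAR_GALLONS_EQUIVALENT / ev_gallons_avoided

# Conversion factor implied by the monitoring service's numbers (back-calculated).
implied_kwh_per_gallon = SOLAR_KWH_GENERATED / SOLAR_GALLONS_EQUIVALENT

print(f"EV would avoid about {ev_gallons_avoided} gallons")
print(f"Solar benefit is about {solar_to_ev_ratio:.1f}x the EV's")
print(f"Implied conversion: about {implied_kwh_per_gallon:.1f} kWh per gallon")
```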

Solar panels also produce power in the summertime, when the power grid is at peak load. As such, one can argue that their benefit is actually greater than the numbers would suggest because they reduce the need for the power utility to buy high-emissions natural gas or coal-based power to satisfy peak demand. We already had an ultra-low emissions vehicle, so despite the fact that the Mercedes burned gasoline, it was a less harmful polluter than many of the peak demand power plants.

The question of which system generates more environmental savings per dollar is even clearer. Our 9 kW solar array cost about $42,000 before the 30% federal tax credit, which brought its cost down to $29,400. The Tesla was more than double that price and qualifies for only $8,500 in total tax incentives (net cost just about $100,000, although one could argue that we could do without about $10K in fancy extras to achieve a better green quotient).

Bottom line: The solar panels create about 3 times the green benefit of the electric car if we ignore cost. On a per-dollar basis, the benefit of the solar panels is about 10 times that of the electric car. So if you are considering which green project to do first, installing the solar panels first should be your priority (assuming your house is a suitable site for solar; not all houses are).
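The per-dollar figure combines the benefit ratio with the cost ratio. Here is a rough Python sketch using only this post’s numbers: the net solar cost after the 30% credit, the roughly $100,000 net Tesla cost, and the gasoline-equivalent totals. The product comes out a little above 10, consistent with the “about 10 times” estimate.

```python
# Per-dollar comparison of the solar array and the Tesla, using this post's figures.
SOLAR_GROSS_COST = 42_000         # dollars, before incentives
SOLAR_CREDIT_PERCENT = 30         # federal tax credit, in percent
TESLA_NET_COST = 100_000          # dollars, "just about $100,000" after incentives

SOLAR_GALLONS_EQUIVALENT = 2_797  # solar output over 30 months, in gallons of gasoline
EV_GALLONS_AVOIDED = 900          # 30 gallons/month x 30 months

# Integer arithmetic keeps the net cost exact: 42,000 x 70 / 100 = 29,400.
solar_net_cost = SOLAR_GROSS_COST * (100 - SOLAR_CREDIT_PERCENT) // 100

benefit_ratio = SOLAR_GALLONS_EQUIVALENT / EV_GALLONS_AVOIDED  # about 3.1
cost_ratio = TESLA_NET_COST / solar_net_cost                    # about 3.4
per_dollar_ratio = benefit_ratio * cost_ratio                   # a little above 10

print(f"Solar net cost: ${solar_net_cost:,}")
print(f"Benefit ratio: {benefit_ratio:.1f}x, cost ratio: {cost_ratio:.1f}x")
print(f"Per-dollar advantage of solar: about {per_dollar_ratio:.1f}x")
```

Trimming the roughly $10K in extras from the Tesla’s net cost would lower the cost ratio somewhat, but solar would still come out well ahead per dollar.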